Migrating large data sets with multiple threads

As you know, the parallel migration feature in DBConvert Studio speeds up data migration between the most popular on-premises and cloud databases. It does this by dividing each table's data into multiple parts and processing them in parallel, which saves a lot of conversion time.

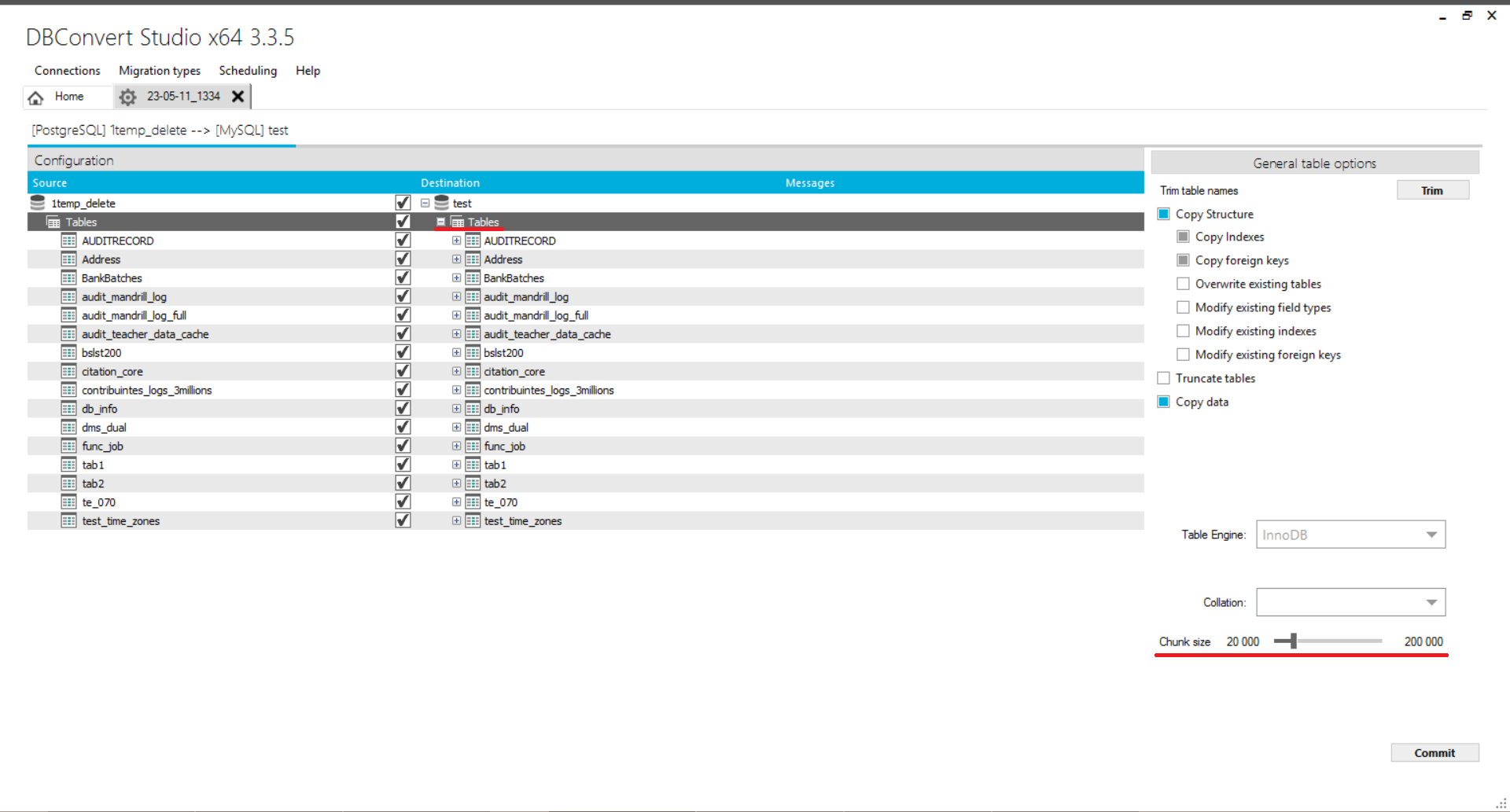

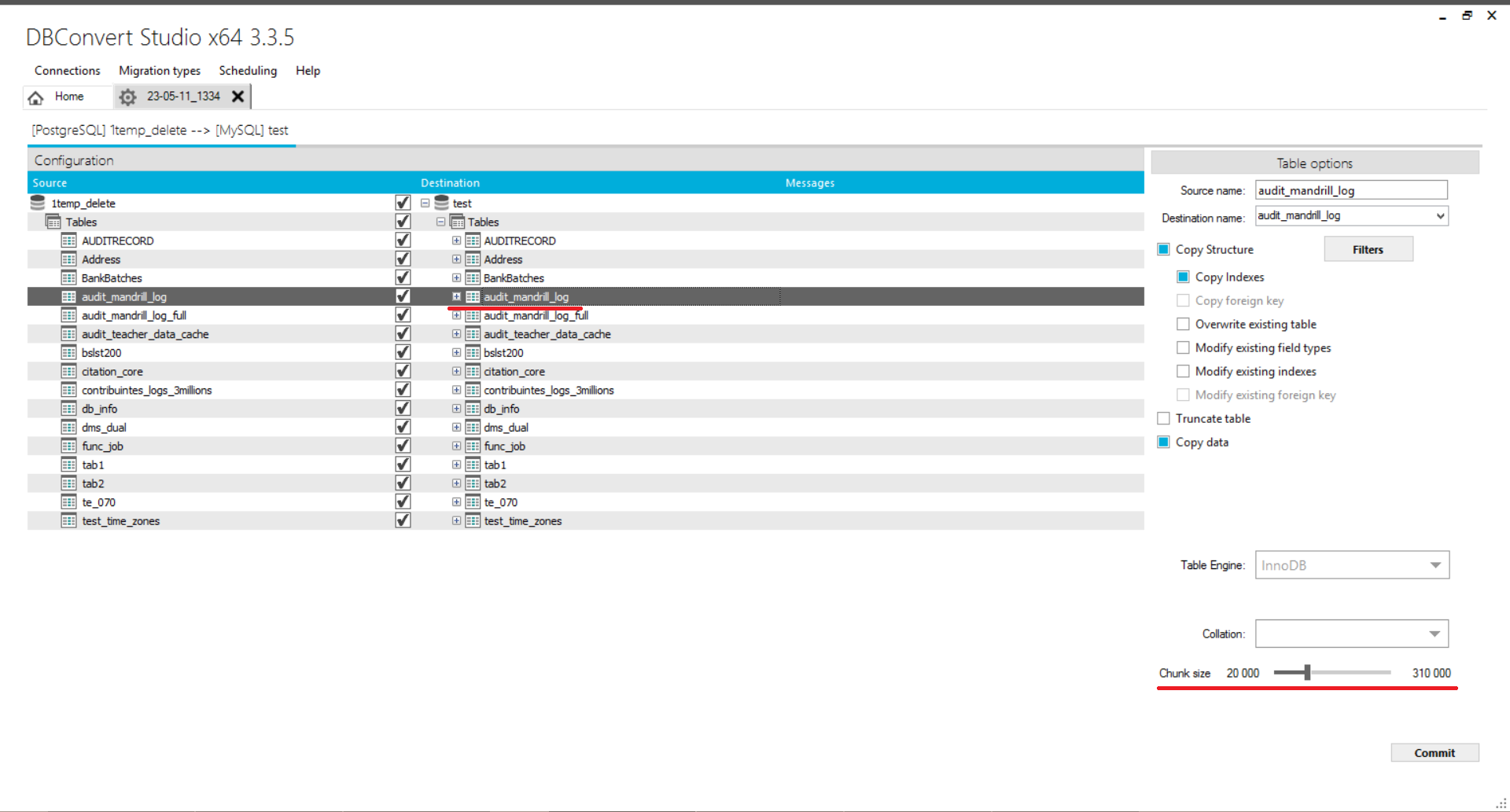
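To make the idea of splitting a table into parts and handling them in parallel more concrete, here is a minimal Python sketch. It is only an illustration, not how DBConvert Studio is implemented internally: the helper names `split_into_parts` and `copy_part` are hypothetical, and plain in-memory lists stand in for the source and destination tables.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_parts(total_rows, parts):
    """Return (start, end) row ranges covering total_rows as evenly as possible."""
    base, extra = divmod(total_rows, parts)
    ranges, start = [], 0
    for i in range(parts):
        # The first `extra` parts receive one additional row each.
        end = start + base + (1 if i < extra else 0)
        ranges.append((start, end))
        start = end
    return ranges


def copy_part(source, target, start, end):
    """Hypothetical worker: copy one slice of the source 'table' to the target."""
    target[start:end] = source[start:end]
    return end - start


source_table = list(range(10))              # stand-in for a source table
target_table = [None] * len(source_table)   # stand-in for the destination table

# Each part is handed to its own worker thread.
with ThreadPoolExecutor(max_workers=3) as pool:
    futures = [
        pool.submit(copy_part, source_table, target_table, start, end)
        for start, end in split_into_parts(len(source_table), 3)
    ]
    copied = sum(f.result() for f in futures)

print(copied)  # 10
```

Each worker touches only its own row range, so the parts do not interfere with one another.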

But why not speed up data processing even more when using threads? To do so, use the ‘Chunk size’ option. By default, the chunk size is set to 200,000 records, which means that every thread reads and processes up to 200,000 records at a time from the source table.
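As a rough picture of what "up to 200,000 records at a time" means, the following Python sketch walks through one thread's share of a table in chunks. The function `iter_chunks` and the 450,000-row figure are assumptions made for this example and do not come from DBConvert Studio itself.

```python
def iter_chunks(total_rows, chunk_size=200_000):
    """Yield (offset, limit) pairs so that no single read exceeds chunk_size rows."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    for offset in range(0, total_rows, chunk_size):
        # The last chunk may be smaller than chunk_size.
        yield offset, min(chunk_size, total_rows - offset)


# A hypothetical share of 450,000 rows handled by one thread:
for offset, limit in iter_chunks(450_000):
    print(f"read {limit} rows starting at row {offset}")
```

With the default value, this thread would perform three reads: two of 200,000 rows and a final one of 50,000 rows. A smaller chunk size means more reads, but each one is smaller.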

There are two main situations in which we encourage you to use this feature:

- One or more tables in the database contain a huge amount of data. To transfer the data successfully, you will need to reduce the chunk size for each thread.

- You encounter one of the errors ‘Query timeout expired’, ‘Out of memory’ or ‘Out of memory for query result’. Depending on the data itself, the amount of data read at once can cause these errors. In that case, reduce the chunk size for every table where they occur.
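To get a feeling for why a smaller chunk size can help with memory errors, here is a back-of-the-envelope estimate in Python. It rests on a simplifying assumption that is ours, not a documented property of DBConvert Studio: every thread holds one full chunk in memory at the same time. The function name, the average row size and the thread count are made-up example values.

```python
def estimate_buffered_bytes(chunk_size, avg_row_bytes, threads):
    """Rough estimate: each thread buffers one chunk of rows (assumption)."""
    return chunk_size * avg_row_bytes * threads


# Hypothetical table with rows of about 2 KiB, migrated with 4 threads.
for chunk_size in (200_000, 50_000):
    mib = estimate_buffered_bytes(chunk_size, avg_row_bytes=2_048, threads=4) / 2**20
    print(f"chunk size {chunk_size}: about {mib:.1f} MiB buffered")
```

Under these assumptions, reducing the chunk size from 200,000 to 50,000 records cuts the buffered data to a quarter. Real memory use depends on the data types, the database driver and the program itself.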

The chunk size can be set for all tables at once or for each table separately.

Move the slider back and forth to select a value. To save your selection, click any other database object in the tree view, for example another table.