Event Driven real-time Replication between MySQL and PostgreSQL.

DBConvert Streams is our new "cloud-first" distributed platform. Benchmark test proves that it takes about 12 seconds to replicate 1 million records between the source and destination database.

Our customers require zero downtime database migration and continuous data replication as workloads move to the clouds, and more data needs to be transferred continuously. Modernizing databases by moving workloads to the cloud requires building real-time data pipelines from legacy systems.

Therefore, in order to meet the needs of our customers, we started developing a brand-new DBConvert Streams, a high-performance cloud-based distributed platform designed to replicate data between databases. Subscribing to CDC (Change Data Capture) streams allows you to react in real time to all data changes in a particular table or in the entire database.

Architecture.

Technically, DBConvert Streams is a microservices architecture-based web application containing traditional source connectors and destination processing units.

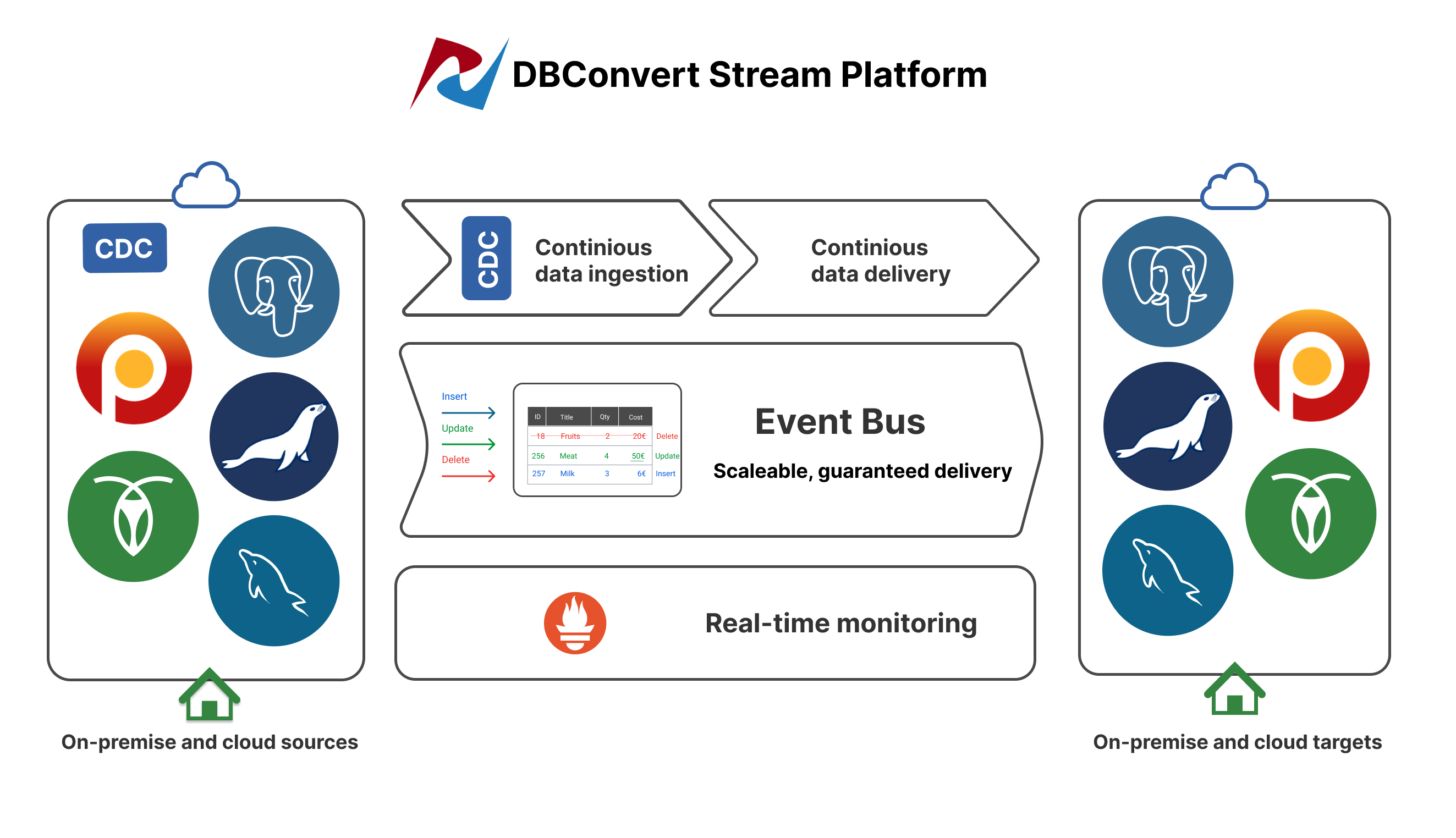

Insert, Update, and Delete Events generated by the database engine are captured on the source side. Our CDC system ingests incoming row-level Stream and publishes it to the Event Bus subsystem.

At the same time, Event Bus delivers a data stream to all consumers (targets) subscribed to the current job.

Multiple target writers handle incoming events from the Event Bus and process them concurrently.

Features.

- Supported Platforms.

DBConvert Streams binaries are available for Linux, Windows, and MacOS. It can also be deployed as Docker containers.

2. Supported databases.

DBConvert Streams currently supports MySQL and PostgreSQL databases.

Sources:

- MySQL Binlog or MariaDB Binlog

- PostgreSQL WAL or CockroachDB WAL

You can choose MySQL or PostgreSQL as the target database.

3. Automatic "CREATE Table" Translation between SQL dialects.

DBConvert Streams automatically converts the CREATE SQL DDL between MySQL and PostgreSQL. Thus, there is no need to worry about manual type conversion of MySQL and Postgres databases. If the destination does not have a suitable table, we will translate the original script into the appropriate dialect and create a new table for you.

4. Consistency and Concurrency.

Unfortunately, distributing a system is usually not an easy task. Consistency is no longer natural, and horizontal scaling becomes more difficult. Distribution of the system requires careful thought.

DBConvert Streams executes the UPDATE and DELETE SQL statements sequentially in the exact order they come from the transaction log.

Multiple INSERT statements are executed simultaneously, greatly speeding up the whole process.

5. Horizontal Scaling of services.

You can run multiple instances of "destination processing service", improving overall performance.

6. Incredible performance.

Replicating INSERT statements for One Million rows takes about 12 seconds. See the next section for more details on tests.

Benchmarks.

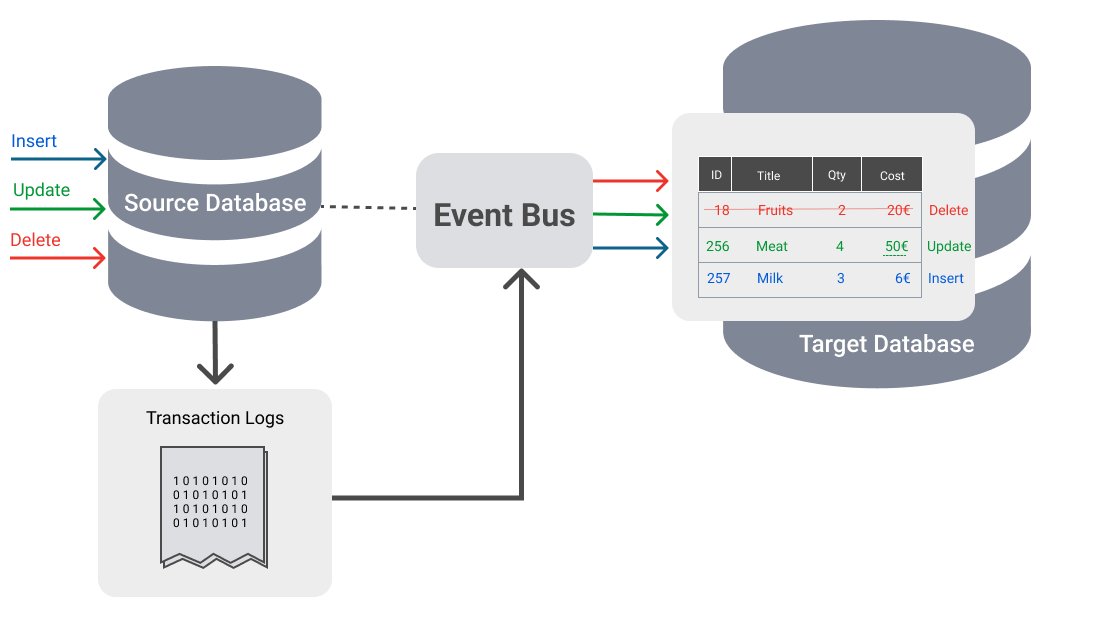

We use Prometheus monitoring toolkit to collect internal metrics provided by DBConvert Streams.

Configuration:

We will use a table with the structure described below for our tests.

CREATE TABLE IF NOT EXISTS public.products

(

id bigint NOT NULL,

name character varying(255) NOT NULL,

price numeric NOT NULL,

weight double precision NOT NULL,

created timestamp without time zone DEFAULT CURRENT_TIMESTAMP,

CONSTRAINT products_pkey PRIMARY KEY (id)

)One source service instance ingests events from the source database side, while three concurrent consumer instances deliver records to the destination database.

To conduct a "real" test of the DBConvert Stream services, we will populate the source database with records and do it as quickly as possible to avoid any performance impact when loading the data. So, we prepared a simple CSV file with 1 million rows for our test. Below are a few lines from the actual CSV file.

id,name,price,weight,created

2,tiNGhsiKmgxICRrbCkLaVEfcAhrfRocgXsYIWSEKw zkMhZCoMVkpNsaaLTilVwuQwiXfHHnbQtVxCCBQONQSgSnPKinCgYqDtRQpjWbuQaWKvgavvVuLxox VKBdrWQyISXGSdWLrc vMRoTVfqEBrjTYzuoicYBbyXSlrYFLWVnYDlhwBnYXWoZJuYwSFdZnQtlgiONoxgrBKxAiwYwRGLssGscnBxso FMXwciWiOArPWgZOIqUMQMxILiF ,16.62780938588609,93744.28095248248,2022-08-14 22:44:23.095632

3,FraAPQocPEKWWtLRIQxPLXNlyBIK zcBvKyliGpbJWgVaqWAbYEiQPEFKGxTKWpadIGZCXvcA AGvMZOFjEHaeEgagJseMVQhuzLmSovlqeeHdZJvyZLperwEVzOZgywdHqnBALpcLVeygCBuFGTsIjoTh gMvtZDaExXSSyWniIwMMETbLcWsuyNLCnmkYzZBIwIDAIhoxCeVHOQdJutvxOXLUMcxLKoiqnUHGZuVSkjiouhe hCiiymoyiFas,89.86627110112526,12166.71221780213,2022-08-14 22:44:23.095652It seems the fastest way to populate a PostgreSQL table is to use the Postgres COPY command.

Connect to the Postgres database and run the COPY command.

psql -h 127.0.0.1 -p 5432 -U postgres postgrespostgres=# COPY products

FROM '/var/lib/postgresql/data/testdata/products.csv'

DELIMITER ',' CSV HEADER;

COPY 1000000Results:

Prometheus shows the following metrics after completing a task:

"source_duration_seconds" metric indicates that it takes about 11 seconds to read 1 million records from the Source Database's Transaction Log file and send them all to the Event Bus.

"duration_duration_seconds" metric is the time from the start of consuming the event bus records to the end of the job when all the records are in place. The figure above shows that it takes about 12 seconds to send all the records to the target database.

As you can see in the picture, each of the three consumer instances processed 1/3 of the total number of records.

So the entire time from start to finish is 12.5 seconds.

Conclusion.

Log-based CDC solutions make it easy for organizations to ingest, process, and deliver real-time data across various environments—in the cloud or on-premises.

Our test shows incredible performance results when used with multiple distributed Destination Processing Service instances.

Find more information about DBConvert Streams and a step-by-step deployment guide.